Reachability

{{Short description|Whether one vertex can be reached from another in a graph}}

In [[graph theory]], '''reachability''' refers to the ability to get from one [[Vertex (graph theory)|vertex]] to another within a graph. A vertex <math>s</math> can reach a vertex <math>t</math> (and <math>t</math> is reachable from <math>s</math>) if there exists a sequence of [[Glossary of graph theory#Basics|adjacent]] vertices (i.e. a [[Path (graph theory)|walk]]) which starts with <math>s</math> and ends with <math>t</math>.

In an undirected graph, reachability between all pairs of vertices can be determined by identifying the [[Connected component (graph theory)|connected components]] of the graph. Any pair of vertices in such a graph can reach each other [[iff|if and only if]] they belong to the same connected component; therefore, in such a graph, reachability is symmetric (<math>s</math> reaches <math>t</math> [[iff]] <math>t</math> reaches <math>s</math>). The connected components of an undirected graph can be identified in linear time. The remainder of this article focuses on the more difficult problem of determining pairwise reachability in a [[directed graph]] (which, incidentally, need not be symmetric).
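A minimal Python sketch of that linear-time approach, assuming vertices are numbered 0 to n-1 and given as an edge list; the function names are illustrative only. It labels each connected component with breadth-first search, after which any reachability query in the undirected graph is a comparison of two labels.

```python
from collections import deque


def component_labels(n, edges):
    """Label each vertex 0..n-1 of an undirected graph with a component id.

    Runs in O(n + m) time: every vertex is enqueued once and every
    edge is scanned twice (once from each endpoint).
    """
    adj = [[] for _ in range(n)]
    for a, b in edges:
        adj[a].append(b)
        adj[b].append(a)

    label = [-1] * n  # -1 means "not yet assigned to a component"
    next_id = 0
    for start in range(n):
        if label[start] != -1:
            continue
        label[start] = next_id
        queue = deque([start])
        while queue:
            x = queue.popleft()
            for y in adj[x]:
                if label[y] == -1:
                    label[y] = next_id
                    queue.append(y)
        next_id += 1
    return label


def reachable_undirected(label, s, t):
    # In an undirected graph, s reaches t iff both lie in the same component.
    return label[s] == label[t]


labels = component_labels(5, [(0, 1), (1, 2), (3, 4)])
print(labels)  # [0, 0, 0, 1, 1]
print(reachable_undirected(labels, 0, 2), reachable_undirected(labels, 2, 3))  # True False
```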

== Definition ==

For a directed graph <math>G = (V, E)</math>, with vertex set <math>V</math> and edge set <math>E</math>, the reachability [[Relation (mathematics)|relation]] of <math>G</math> is the [[transitive closure]] of <math>E</math>, which is to say the set of all ordered pairs <math>(s,t)</math> of vertices in <math>V</math> for which there exists a sequence of vertices <math>v_0 = s, v_1, v_2, \ldots, v_k = t</math> such that the edge <math>(v_{i-1},v_i)</math> is in <math>E</math> for all <math>1 \leq i \leq k</math>.<ref name="skiena">{{citation
| last = Skiena | first = Steven S.
| contribution = 15.5 Transitive Closure and Reduction
| edition = 2nd
| isbn = 9781848000698
| pages = 495–497
| publisher = Springer
| title = The Algorithm Design Manual
| url = https://books.google.com/books?id=7XUSn0IKQEgC&pg=PA495
| year = 2011}}.</ref>
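The definition can be turned directly into a deliberately naive Python sketch: repeatedly compose the relation with itself until no new pairs appear. The function name is hypothetical. It collects the pairs joined by a walk with at least one edge (<math>k \geq 1</math> in the notation above), so a pair <math>(v, v)</math> appears only when <math>v</math> lies on a cycle; adding every <math>(v, v)</math> for the empty walk (<math>k = 0</math>) is a convention left to the caller.

```python
def reachability_relation(edges):
    """Transitive closure of a set of directed edges (s, t).

    Naive fixpoint iteration straight from the definition: whenever
    (s, u) and (u, t) are both in the relation, add (s, t); stop when
    a full pass adds nothing. Polynomial but slow; for illustration only.
    """
    closure = set(edges)
    while True:
        new_pairs = {
            (s, t)
            for (s, u) in closure
            for (u2, t) in closure
            if u == u2
        } - closure
        if not new_pairs:
            return closure
        closure |= new_pairs


print(sorted(reachability_relation([("a", "b"), ("b", "c")])))
# [('a', 'b'), ('a', 'c'), ('b', 'c')]
```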

If <math>G</math> is [[directed acyclic graph|acyclic]], then its reachability relation is a [[partial order]]; any partial order may be defined in this way, for instance as the reachability relation of its [[transitive reduction]].<ref>{{citation
| last = Cohn | first = Paul Moritz
| isbn = 9781852335878
| page = 17
| publisher = Springer
| title = Basic Algebra: Groups, Rings, and Fields
| url = https://books.google.com/books?id=VESm0MJOiDQC&pg=PA17
| year = 2003}}.</ref> A noteworthy consequence of this is that, since partial orders are antisymmetric, if <math>s</math> can reach a distinct vertex <math>t</math>, then <math>t</math> ''cannot'' reach <math>s</math>. Intuitively, if we could travel from <math>s</math> to <math>t</math> and back to <math>s</math>, then <math>G</math> would contain a [[cycle (graph theory)|cycle]], contradicting that it is acyclic. If <math>G</math> is directed but ''not'' acyclic (i.e. it contains at least one cycle), then its reachability relation will correspond to a [[preorder]] instead of a partial order.<ref>{{citation
| last = Schmidt | first = Gunther
| isbn = 9780521762687
| page = 77
| publisher = Cambridge University Press
| series = Encyclopedia of Mathematics and Its Applications
| title = Relational Mathematics
| url = https://books.google.com/books?id=E4dREBTs5WsC&pg=PA559
| volume = 132
| year = 2010}}.</ref>
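As a small illustration (Python, with hypothetical helper names), one can compute each vertex's reachable set, counting the empty walk so that every vertex reaches itself, and then test antisymmetry. Reflexivity and transitivity hold by construction in both cases; only antisymmetry separates the partial order of an acyclic graph from the preorder of a cyclic one.

```python
def reach_sets(vertices, edges):
    """Map each vertex to the set of vertices it can reach (itself included)."""
    adj = {v: [] for v in vertices}
    for a, b in edges:
        adj[a].append(b)
    reach = {}
    for s in vertices:
        seen = {s}  # s reaches itself via the empty walk (k = 0)
        stack = [s]
        while stack:
            x = stack.pop()
            for y in adj[x]:
                if y not in seen:
                    seen.add(y)
                    stack.append(y)
        reach[s] = seen
    return reach


def is_antisymmetric(reach):
    """True iff no two distinct vertices reach each other."""
    return all(
        not (t in reach[s] and s in reach[t])
        for s in reach
        for t in reach
        if s != t
    )


dag = reach_sets("abc", [("a", "b"), ("b", "c")])
cyclic = reach_sets("abc", [("a", "b"), ("b", "c"), ("c", "a")])
print(is_antisymmetric(dag))     # True: a partial order
print(is_antisymmetric(cyclic))  # False: only a preorder
```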

== Algorithms ==

Algorithms for determining reachability fall into two classes: those that require [[Data pre-processing|preprocessing]] and those that do not.

If only one (or a few) queries are to be made, it may be more efficient to forgo the use of more complex data structures and compute the reachability of the desired pair directly. This can be accomplished in [[linear time]] using algorithms such as [[breadth-first search]] or [[iterative deepening depth-first search]].<ref>{{citation
| last = Gersting | first = Judith L. | author-link = Judith Gersting
| edition = 6th
| isbn = 9780716768647
| page = 519
| publisher = Macmillan
| title = Mathematical Structures for Computer Science
| url = https://books.google.com/books?id=lvAo3AeJikQC&pg=PA519
| year = 2006}}.</ref>
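A single query can be answered with a plain breadth-first search, sketched below in Python; the adjacency-dictionary representation and the name <code>can_reach</code> are assumptions made for illustration. Each call visits every vertex and edge at most once, i.e. <math>O(|V| + |E|)</math> time.

```python
from collections import deque


def can_reach(adj, s, t):
    """Breadth-first search from s; True iff t is reachable from s."""
    if s == t:
        return True
    seen = {s}
    queue = deque([s])
    while queue:
        x = queue.popleft()
        for y in adj.get(x, ()):
            if y == t:
                return True
            if y not in seen:
                seen.add(y)
                queue.append(y)
    return False


graph = {1: [2], 2: [3], 3: [], 4: [1]}
print(can_reach(graph, 4, 3))  # True
print(can_reach(graph, 3, 4))  # False
```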

If many queries are to be made, a more sophisticated method may be used; the exact choice of method depends on the nature of the graph being analysed. In exchange for preprocessing time and some extra storage space, we can create a data structure which can then answer reachability queries on any pair of vertices in as little as <math>O(1)</math> time. Three different algorithms and data structures for three different, increasingly specialized situations are outlined below.

=== Floyd–Warshall Algorithm ===

The [[Floyd–Warshall algorithm]]<ref>{{citation
| last1 = Cormen | first1 = Thomas H. | author1-link = Thomas H. Cormen
| last2 = Leiserson | first2 = Charles E. | author2-link = Charles E. Leiserson
| last3 = Rivest | first3 = Ronald L. | author3-link = Ronald L. Rivest
| last4 = Stein | first4 = Clifford | author4-link = Clifford Stein
| contribution = Transitive closure of a directed graph
| edition = 2nd
| isbn = 0-262-03293-7
| pages = 632–634
| publisher = MIT Press and McGraw-Hill
| title = [[Introduction to Algorithms]]
| year = 2001}}.</ref> can be used to compute the transitive closure of any directed graph, which gives rise to the reachability relation as in the definition, above.

The algorithm requires <math>O(|V|^3)</math> time and <math>O(|V|^2)</math> space in the worst case. It is not specific to reachability: it also computes the shortest-path distance between every pair of vertices. For graphs containing negative cycles, shortest paths may be undefined, but reachability between pairs can still be determined.
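For reachability alone, only the boolean form of Floyd–Warshall is needed (often credited to Warshall): the min/plus operations on distances become or/and on booleans. The Python sketch below assumes vertices numbered 0 to n-1 and a hypothetical function name; after the cubic-time preprocessing, each query is a single table lookup.

```python
def transitive_closure_matrix(n, edges):
    """Boolean Floyd–Warshall on vertices 0..n-1.

    reach[i][j] ends up True iff there is a walk of length >= 1 from i to j.
    O(n^3) time and O(n^2) space.
    """
    reach = [[False] * n for _ in range(n)]
    for a, b in edges:
        reach[a][b] = True
    for k in range(n):  # now allow k as an intermediate vertex
        row_k = reach[k]
        for i in range(n):
            if reach[i][k]:
                row_i = reach[i]
                for j in range(n):
                    if row_k[j]:
                        row_i[j] = True
    return reach


r = transitive_closure_matrix(4, [(0, 1), (1, 2), (2, 0), (3, 2)])
print(r[3][1], r[0][3])  # True False
```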

=== Thorup's Algorithm ===

For [[Planar Graph|planar]] [[Directed graph|digraphs]], a much faster method is available, as described by [[Mikkel Thorup]] in 2004.<ref>{{citation
| last = Thorup | first = Mikkel | author-link = Mikkel Thorup
| doi = 10.1145/1039488.1039493
| issue = 6
| journal = [[Journal of the ACM]]
| mr = 2145261
| pages = 993–1024
| title = Compact oracles for reachability and approximate distances in planar digraphs
| volume = 51
| year = 2004| s2cid = 18864647 }}.</ref> This method can answer reachability queries on a planar graph in <math>O(1)</math> time after spending <math>O(n \log{n})</math> preprocessing time to create a data structure of <math>O(n \log{n})</math> size, where <math>n</math> is the number of vertices. This algorithm can also supply approximate shortest path distances, as well as route information.

The overall approach is to associate with each vertex a relatively small set of so-called separator paths such that any path from a vertex <math>v</math> to any other vertex <math>w</math> must go through at least one of the separators associated with <math>v</math> or <math>w</math>. An outline of the reachability-related parts of the method follows.

Given a graph <math>G</math>, the algorithm begins by organizing the vertices into layers starting from an arbitrary vertex <math>v_0</math>. The layers are built in alternating steps by first considering all vertices reachable ''from'' the previous step (starting with just <math>v_0</math>) and then all vertices that can ''reach'' the previous step, until all vertices have been assigned to a layer. By construction of the layers, every vertex appears in at most two layers, and every [[Path (graph theory)#Different types of paths|directed path]], or dipath, in <math>G</math> is contained within two adjacent layers <math>L_i</math> and <math>L_{i+1}</math>. Let <math>L_k</math> be the last layer created, that is, let <math>k</math> be the smallest value such that <math>\bigcup_{i=0}^{k} L_i = V</math>.
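The alternating layering can be sketched in Python as follows. This is only one reading of the description above, not Thorup's full construction: <math>L_0</math> holds the vertices reachable from <math>v_0</math>, and each later layer collects the still-unassigned vertices that are reachable from (even steps) or can reach (odd steps) the previous layer. The sketch assumes the digraph is weakly connected, places each vertex in exactly one layer, and uses a hypothetical function name.

```python
from collections import deque


def alternating_layers(n, edges, v0):
    """Split vertices 0..n-1 into layers L_0, L_1, ... starting from v0."""
    succ = [[] for _ in range(n)]
    pred = [[] for _ in range(n)]
    for a, b in edges:
        succ[a].append(b)
        pred[b].append(a)

    layer_of = [None] * n
    layers = []
    sources = [v0]  # searches for the next layer start from these vertices
    forward = True  # L_0 is a "reachable from" step
    while sources:
        i = len(layers)
        nbrs = succ if forward else pred
        new_layer = []
        queue = deque()
        for v in sources:
            if layer_of[v] is None:  # only v0 itself, in the first step
                layer_of[v] = i
                new_layer.append(v)
            queue.append(v)
        # BFS through unassigned vertices only, in the current direction.
        while queue:
            x = queue.popleft()
            for y in nbrs[x]:
                if layer_of[y] is None:
                    layer_of[y] = i
                    new_layer.append(y)
                    queue.append(y)
        if not new_layer:
            break
        layers.append(new_layer)
        sources = new_layer
        forward = not forward
    return layers


print(alternating_layers(5, [(0, 1), (2, 1), (2, 3), (4, 3)], 0))
# [[0, 1], [2], [3], [4]]
```

In the example every edge joins two adjacent layers, as the paragraph above requires of dipaths.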

The graph is then re-expressed as a series of digraphs <math>G_0, G_1, \ldots, G_{k-1}</math> where each <math>G_i = r_i \cup L_i \cup L_{i+1}</math> and where <math>r_i</math> is the contraction of all previous levels <math>L_0, \ldots, L_{i-1}</math> into a single vertex. Because every dipath appears in at most two consecutive layers, and because each <math>G_i</math> is formed by two consecutive layers, every dipath in <math>G</math> appears in its entirety in at least one <math>G_i</math> (and in no more than two consecutive such graphs).

For each <math>G_i</math>, three separators are identified which, when removed, break the graph into three components which each contain at most half the vertices of the original. As <math>G_i</math> is built from two layers of opposed dipaths, each separator may consist of up to 2 dipaths, for a total of up to 6 dipaths over all of the separators. Let <math>S</math> be this set of dipaths. The proof that such separators can always be found is related to the [[Planar Separator Theorem]] of Lipton and Tarjan, and these separators can be located in linear time.

For each <math>Q \in S</math>, the directed nature of <math>Q</math> provides for a natural indexing of its vertices from the start to the end of the path. For each vertex <math>v</math> in <math>G_i</math>, we locate the first vertex in <math>Q</math> reachable from <math>v</math>, and the last vertex in <math>Q</math> that can reach <math>v</math>. That is, we are looking at how early into <math>Q</math> we can get from <math>v</math>, and how far along <math>Q</math> we can go and still get back to <math>v</math>. This information is stored with each <math>v</math>. Then for any pair of vertices <math>u</math> and <math>w</math>, <math>u</math> can reach <math>w</math> ''via'' <math>Q</math> if the first vertex of <math>Q</math> reachable from <math>u</math> comes no later along <math>Q</math> than the last vertex of <math>Q</math> that can reach <math>w</math>.
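A minimal Python sketch of the per-path labelling, assuming the separator dipaths of one <math>G_i</math> are already known (finding them is not shown) and that <code>succ</code>/<code>pred</code> map each vertex to its out- and in-neighbours. The helper names are hypothetical. For each path it stores the two indices described above and tests whether the first index from <math>u</math> is at most the last index into <math>w</math>; passing several labelled paths to <code>reaches_via</code> mimics scanning a pair of labels for a common <math>Q</math>, without the refinements Thorup uses to reach constant query time.

```python
from collections import deque


def _sweep(neighbours, path, order):
    """BFS from path[j] for j in `order`; each vertex keeps the first j that reaches it.

    Already-labelled vertices are not expanded again: everything beyond
    them was labelled by an earlier index in `order`, which is preferred.
    """
    label = {}
    for j in order:
        start = path[j]
        if start in label:
            continue
        label[start] = j
        queue = deque([start])
        while queue:
            x = queue.popleft()
            for y in neighbours.get(x, ()):
                if y not in label:
                    label[y] = j
                    queue.append(y)
    return label


def path_labels(succ, pred, path):
    """first[v]: smallest j with v reaching path[j]; last[v]: largest j with path[j] reaching v.

    Vertices with no such index are simply absent from the dict.
    """
    m = len(path)
    first = _sweep(pred, path, range(m))             # backward searches, low j first
    last = _sweep(succ, path, range(m - 1, -1, -1))  # forward searches, high j first
    return first, last


def reaches_via(labelled_paths, u, w):
    """True if u reaches w through at least one of the labelled dipaths."""
    return any(
        u in first and w in last and first[u] <= last[w]
        for first, last in labelled_paths
    )


succ = {"q0": ["q1", "y"], "q1": ["q2"], "q2": ["w"], "u": ["q1"], "x": ["q2"]}
pred = {}
for a, outs in succ.items():
    for b in outs:
        pred.setdefault(b, []).append(a)

labels_q = path_labels(succ, pred, ["q0", "q1", "q2"])
print(reaches_via([labels_q], "u", "w"))  # True: u -> q1 -> q2 -> w
print(reaches_via([labels_q], "x", "y"))  # False
```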

Every vertex is labelled as above for each step of the recursion which builds <math>G_0, \ldots, G_{k-1}</math>. As this recursion has logarithmic depth, a total of <math>O(\log{n})</math> extra information is stored per vertex. From this point, a logarithmic time query for reachability is as simple as looking over each pair of labels for a common, suitable <math>Q</math>. The original paper then works to tune the query time down to <math>O(1)</math>.

In summarizing the analysis of this method, first consider that the layering approach partitions the vertices so that each vertex is considered only <math>O(1)</math> times. The separator phase of the algorithm breaks the graph into components which are at most <math>1/2</math> the size of the original graph, resulting in a logarithmic recursion depth. At each level of the recursion, only linear work is needed to identify the separators as well as the connections possible between vertices. The overall result is <math>O(n \log n)</math> preprocessing time with only <math>O(\log{n})</math> additional information stored for each vertex.
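The accounting in the preceding paragraph can be written out as follows, taking as given (from the text) that each vertex occurs in only <math>O(1)</math> of the graphs <math>G_i</math>, that components shrink to at most half their size per recursion level, and that each level needs linear work:

```latex
\begin{aligned}
\text{recursion depth} &\le \log_2 n
  && \text{(component size at least halves per level)}\\
\text{work per level} &= O(n)
  && \text{(components at one level are disjoint)}\\
\text{preprocessing} &= \sum_{d=0}^{\lceil \log_2 n \rceil} O(n) = O(n \log n)\\
\text{labels per vertex} &= O(1) \cdot O(\log n) = O(\log n)
  && \text{(at most 6 dipaths in } S \text{ per level)}
\end{aligned}
```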

=== Kameda's Algorithm ===

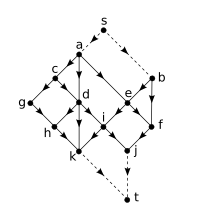

[[File:Graph suitable for Kameda's method.svg|thumb|right|200px|A suitable digraph for Kameda's method with <math>s</math> and <math>t</math> added.]]
[[File:Kameda's algorithm run.svg|thumb|right|200px|The same graph as above after Kameda's algorithm has run, showing the DFS labels for each vertex.]]

An even faster method for preprocessing, due to T. Kameda in 1975,<ref>{{citation
| last = Kameda | first = T
| journal = [[Information Processing Letters]]
| volume = 3
| number = 3
| pages = 75–77
| title = On the vector representation of the reachability in planar directed graphs
| year = 1975
| doi=10.1016/0020-0190(75)90019-8}}.</ref> can be used if the graph is [[Planar graph|planar]], [[Directed acyclic graph|acyclic]], and also exhibits the following additional properties: all 0-[[Directed graph#Indegree and outdegree|indegree]] and all 0-[[Directed graph#Indegree and outdegree|outdegree]] vertices appear on the same [[Glossary of graph theory#face|face]] (often assumed to be the outer face), and it is possible to partition the boundary of that face into two parts such that all 0-indegree vertices appear on one part, and all 0-outdegree vertices appear on the other (i.e. the two types of vertices do not alternate).

If <math>G</math> exhibits these properties, then we can preprocess the graph in only <math>O(n)</math> time, and store only <math>O(\log{n})</math> extra bits per vertex, answering reachability queries for any pair of vertices in <math>O(1)</math> time with a simple comparison.

Preprocessing performs the following steps. We add a new vertex <math>s</math> which has an edge to each 0-indegree vertex, and another new vertex <math>t</math> with edges from each 0-outdegree vertex. Note that the properties of <math>G</math> allow us to do so while maintaining planarity, that is, there will still be no edge crossings after these additions. For each vertex we store the list of adjacencies (out-edges) in the order given by the planar embedding of the graph (for example, clockwise with respect to the embedding). We then initialize a counter <math>i = n + 1</math> and begin a depth-first traversal from <math>s</math>. During this traversal, the adjacency list of each vertex is visited from left to right as needed. As vertices are popped from the traversal's stack, they are labelled with the value <math>i</math>, and <math>i</math> is then decremented. Note that <math>t</math> is always labelled with the value <math>n+1</math> and <math>s</math> is always labelled with <math>0</math>: <math>t</math> is the only vertex without out-edges, so in this acyclic graph it is the first to be popped, while <math>s</math>, the root of the traversal, is popped last. The depth-first traversal is then repeated, but this time the adjacency list of each vertex is visited from right to left.

When completed, <math>s</math> and <math>t</math>, and their incident edges, are removed. Each remaining vertex stores a 2-dimensional label with values from <math>1</math> to <math>n</math>. Given two vertices <math>u</math> and <math>v</math>, and their labels <math>L(u) = (a_1, a_2)</math> and <math>L(v) = (b_1, b_2)</math>, we say that <math>L(u) < L(v)</math> if and only if <math>a_1 \leq b_1</math>, <math>a_2 \leq b_2</math>, and there exists at least one component <math>a_1</math> or <math>a_2</math> which is strictly less than <math>b_1</math> or <math>b_2</math>, respectively.

The main result of this method then states that, for distinct vertices <math>u</math> and <math>v</math>, <math>v</math> is reachable from <math>u</math> if and only if <math>L(u) < L(v)</math>, which is easily calculated in <math>O(1)</math> time.
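The preprocessing and query can be sketched in Python as below. Assumptions: the caller supplies each vertex's out-neighbours already sorted left to right in a planar embedding that meets Kameda's conditions, and lists the sources left to right; the helper names and the reserved vertex names <code>"s"</code> and <code>"t"</code> are hypothetical. The traversal is iterative so that deep graphs do not hit Python's recursion limit.

```python
def dfs_finish_labels(adj, root, n, right_to_left=False):
    """Iterative DFS from root; label vertices with a decreasing counter as they are popped.

    The counter starts at n + 1. adj[v] lists v's out-neighbours left to
    right in the embedding; right_to_left scans them in the opposite order.
    """
    def out_neighbours(v):
        return reversed(adj[v]) if right_to_left else iter(adj[v])

    label = {}
    counter = n + 1
    visited = {root}
    stack = [(root, out_neighbours(root))]
    while stack:
        v, remaining = stack[-1]
        for w in remaining:
            if w not in visited:
                visited.add(w)
                stack.append((w, out_neighbours(w)))
                break
        else:
            # Every out-neighbour of v has been explored: pop and label v.
            stack.pop()
            label[v] = counter
            counter -= 1
    return label


def kameda_labels(adj, sources, sinks):
    """Hypothetical helper: add s and t, run both traversals, drop s and t."""
    n = len(adj)
    g = {v: list(out) for v, out in adj.items()}  # copy; input is not modified
    g["s"] = list(sources)
    g["t"] = []
    for v in sinks:
        g[v].append("t")
    first = dfs_finish_labels(g, "s", n)
    second = dfs_finish_labels(g, "s", n, right_to_left=True)
    return {v: (first[v], second[v]) for v in adj}


def reaches(labels, u, v):
    """L(u) < L(v): componentwise <=, strictly smaller in at least one place."""
    (a1, a2), (b1, b2) = labels[u], labels[v]
    return a1 <= b1 and a2 <= b2 and (a1 < b1 or a2 < b2)


# Example: a -> b, a -> c, b -> d, c -> d, c -> e, drawn with a on top,
# b left of c, and the sinks d (left) and e (right) at the bottom.
graph = {"a": ["b", "c"], "b": ["d"], "c": ["d", "e"], "d": [], "e": []}
labels = kameda_labels(graph, sources=["a"], sinks=["d", "e"])
print(labels)
# {'a': (1, 1), 'b': (4, 2), 'c': (2, 3), 'd': (5, 4), 'e': (3, 5)}
print(reaches(labels, "a", "e"), reaches(labels, "b", "e"))  # True False
```

As in the statement above, the comparison is meant for distinct vertices; <code>reaches(labels, u, u)</code> returns False.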

== Related problems ==

A related problem is to solve reachability queries with some number <math>k</math> of vertex failures. For example: "Can vertex <math>u</math> still reach vertex <math>v</math> even though vertices <math>s_1, s_2, \ldots, s_k</math> have failed and can no longer be used?" A similar problem may consider edge failures rather than vertex failures, or a mix of the two. The breadth-first search technique works just as well on such queries, but constructing an efficient oracle is more challenging.<ref>{{citation
| last1 = Demetrescu | first1 = Camil
| last2 = Thorup | first2 = Mikkel | author2-link = Mikkel Thorup
| last3 = Chowdhury | first3 = Rezaul Alam
| last4 = Ramachandran | first4 = Vijaya
| doi = 10.1137/S0097539705429847
| issue = 5
| journal = [[SIAM Journal on Computing]]
| mr = 2386269
| pages = 1299–1318
| title = Oracles for distances avoiding a failed node or link
| volume = 37
| year = 2008| citeseerx = 10.1.1.329.5435}}.</ref><ref>{{citation
| last1 = Halftermeyer | first1 = Pierre
| title = Connectivity in Networks and Compact Labeling Schemes for Emergency Planning
| location = Universite de Bordeaux
| url = https://tel.archives-ouvertes.fr/tel-01110316/document }}.</ref>
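With vertex failures, the direct approach is simply a breadth-first search that refuses to enter failed vertices. A Python sketch follows (the function name and graph representation are illustrative); each query still costs <math>O(|V| + |E|)</math> time, which is what a failure-aware oracle aims to beat.

```python
from collections import deque


def can_reach_avoiding(adj, u, v, failed):
    """BFS from u to v that never enters a vertex in `failed`."""
    failed = set(failed)
    if u in failed or v in failed:
        return False
    if u == v:
        return True
    seen = {u}
    queue = deque([u])
    while queue:
        x = queue.popleft()
        for y in adj.get(x, ()):
            if y in failed or y in seen:
                continue
            if y == v:
                return True
            seen.add(y)
            queue.append(y)
    return False


graph = {"u": ["a", "b"], "a": ["v"], "b": ["v"]}
print(can_reach_avoiding(graph, "u", "v", {"a"}))       # True (via b)
print(can_reach_avoiding(graph, "u", "v", {"a", "b"}))  # False
```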

Another problem related to reachability queries is quickly recomputing reachability relationships when some portion of the graph is changed. For example, this is a relevant concern to [[Garbage collection (computer science)|garbage collection]], which needs to balance the reclamation of memory (so that it may be reallocated) with the performance concerns of the running application.

== See also ==
* [[Gammoid]]
* [[St-connectivity|''st''-connectivity]]
* [[Petri net]]

==References== |

{{reflist}}

[[Category:Graph connectivity]]

Latest revision as of 12:28, 26 June 2023
