Distributed shared memory: Difference between revisions

m →Disadvantages of DSM: slight corrections |

Rescuing 2 sources and tagging 0 as dead.) #IABot (v2.0.9.5 |

||

| (47 intermediate revisions by 30 users not shown) | |||

| Line 1: | Line 1: | ||

{{Refimprove|date=January 2022}} |

|||

{{redirect|DGAS|the DGA awards|Directors Guild of America Award}} |

{{redirect|DGAS|the DGA awards|Directors Guild of America Award}} |

||

In [[computer science]], '''distributed shared memory''' ('''DSM''') is a form of memory architecture where physically separated memories can be addressed as one logically shared address space. Here, the term "shared" does not mean that there is a single centralized memory, but that the address space is "shared" (same physical address on two processors refers to the same location in memory).<ref>{{cite book |

|||

In [[computer science]], '''distributed shared memory''' ('''DSM''') is a form of [[memory architecture]] where physically separated memories can be addressed as a single shared [[address space]]. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared—i.e., the same physical address on two [[Processor (computing)|processors]] refers to the same location in memory.<ref>{{cite book |

|||

| last1 = Patterson |

| last1 = Patterson |

||

| first1 = David A. |

| first1 = David A. |

||

| |

| author-link1 = David Patterson (computer scientist) |

||

| last2 = Hennessy |

| last2 = Hennessy |

||

| first2 = John L. |

| first2 = John L. |

||

| |

| author-link2 = John L. Hennessy |

||

| title = Computer Architecture: A Quantitative Approach |

| title = Computer Architecture: A Quantitative Approach |

||

| edition = 4th |

| edition = 4th |

||

| Line 13: | Line 15: | ||

| year = 2006 |

| year = 2006 |

||

| isbn = 978-01-2370490-0 |

| isbn = 978-01-2370490-0 |

||

}}</ref>{{rp|201}} '''Distributed global address space''' ('''DGAS'''), is a similar term for a wide class of software and hardware implementations, in which each [[node (networking)|node]] of a [[computer cluster|cluster]] has access to [[shared memory architecture|shared memory]] in addition to each node's |

}}</ref>{{rp|201}} '''Distributed global address space''' ('''DGAS'''), is a similar term for a wide class of software and hardware implementations, in which each [[node (networking)|node]] of a [[computer cluster|cluster]] has access to [[shared memory architecture|shared memory]] in addition to each node's private (i.e., not shared) [[Random-access memory|memory]]. |

||

== Overview == |

|||

A distributed-memory system ,often called a multicomputer, consists of multiple independent processing nodes with local memory modules which is connected by a general interconnection network. Software DSM systems can be implemented in an [[operating system]], or as a programming library and can be thought of as extensions of the underlying [[virtual memory]] architecture. When implemented in the operating system, such systems are transparent to the developer; which means that the underlying [[distributed memory]] is completely hidden from the users. In contrast, software DSM systems implemented at the library or language level are not transparent and developers usually have to program them differently. However, these systems offer a more portable approach to DSM system implementations. A distributed shared memory system implements the shared-memory model on a physically distributed memory system. |

|||

[[File:DSM FIGURE.jpg|330px|right]] |

|||

A distributed-memory system, often called a [[multicomputer]], consists of multiple independent processing nodes with local memory modules which is connected by a general interconnection network. Software DSM systems can be implemented in an [[operating system]], or as a programming library and can be thought of as extensions of the underlying [[virtual memory]] architecture. When implemented in the operating system, such systems are transparent to the developer; which means that the underlying [[distributed memory]] is completely hidden from the users. In contrast, software DSM systems implemented at the library or language level are not transparent and developers usually have to program them differently. However, these systems offer a more portable approach to DSM system implementations. A DSM system implements the [[shared-memory]] model on a physically distributed memory system. |

|||

== Methods of Achieving DSM == |

|||

There are usually two methods of achieving distributed shared memory: |

|||

* hardware, such as cache coherence circuits and network interfaces |

|||

* software |

|||

DSM can be achieved via software as well as hardware. Hardware examples include [[cache coherence]] circuits and [[network interface controller]]s. There are three ways of implementing DSM: |

|||

==Software DSM Implementation== |

|||

* [[Page (computer memory)|Page]]-based approach using virtual memory |

|||

There are three ways of implementing a software distributed shared memory: |

|||

* |

* Shared-variable approach using routines to access shared variables |

||

* Object-based approach, ideally accessing shared data through object-oriented discipline |

|||

* shared variable approach using some routines to access shared variables; |

|||

* object based approach ideally accessing shared data through object-oriented discipline. |

|||

===Advantages=== |

|||

== Issues in Implementing DSM Software == |

|||

* Scales well with a large number of nodes |

|||

* Reduce delays |

|||

* Message passing is hidden |

|||

* Data is replicated or cached |

|||

* Can handle complex and large databases without replication or sending the data to processes |

|||

* Semantics for concurrent access must be clearly specified |

|||

* Generally cheaper than using a multiprocessor system |

|||

* DSM is controlled by memory management software, the operating system, or language run-time system |

|||

* Provides large virtual memory space |

|||

* Locating remote data |

|||

* Programs are more portable due to common programming interfaces |

|||

* Granularity: how much data is transferred in a single operation? |

|||

* Shield programmers from sending or receiving primitives |

|||

===Disadvantages=== |

|||

* Generally slower to access than non-distributed shared memory |

|||

* Must provide additional protection against simultaneous accesses to shared data |

|||

* May incur a performance penalty |

|||

* Little programmer control over actual messages being generated |

|||

* Programmers need to understand consistency models to write correct programs |

|||

===Comparison with message passing === |

|||

==Message Passing vs. DSM== |

|||

{| class="wikitable" |

{| class="wikitable" |

||

|- |

|- |

||

| Line 59: | Line 66: | ||

[[Shared memory architecture]] may involve separating memory into shared parts distributed amongst nodes and main memory; or distributing all memory between nodes. A [[coherence protocol]], chosen in accordance with a [[consistency model]], maintains [[memory coherence]]. |

[[Shared memory architecture]] may involve separating memory into shared parts distributed amongst nodes and main memory; or distributing all memory between nodes. A [[coherence protocol]], chosen in accordance with a [[consistency model]], maintains [[memory coherence]]. |

||

==Directory memory coherence== |

|||

==Abstract View of DSM== |

|||

[[Memory coherence]] is necessary such that the system which organizes the DSM is able to track and maintain the state of data blocks in nodes across the memories comprising the system. A directory is one such mechanism which maintains the state of cache blocks moving around the system. |

|||

[[File:DSM FIGURE.jpg|center|DSM FIGURE]] |

|||

==Advantages of DSM== |

|||

* Scales well with a large number of nodes |

|||

* Message passing is hidden |

|||

* Can handle complex and large data bases without replication or sending the data to processes |

|||

* Generally cheaper than using a multiprocessor system |

|||

* Provides large virtual memory space |

|||

* Programs are more portable due to common programming interfaces |

|||

* Shield programmers from sending or receiving primitives |

|||

==Disadvantages of DSM== |

|||

* Could cause a performance penalty |

|||

* May not provide protection against simultaneous accesses to shared data |

|||

==Directory Memory Coherence== |

|||

Memory coherence is necessary such that the system which organizes the DSM is able to track and maintain the state of data blocks in nodes across the memories comprising the system. |

|||

=== States === |

=== States === |

||

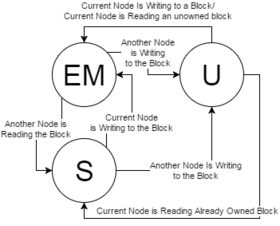

[[File:Dsm states.png|right|280px|State diagram of a block of memory in a DSM. A block is "owned" if one of the nodes has the block in state EM.]] |

|||

A basic DSM will track three states among nodes for any given block in the directory. There will be some state to dictate the block as uncached (U), a state to dictate a block as exclusively owned or modified owned (EM), and a state to dictate a block as shared (S). As blocks come into the directory organization, they will transition from U to EM (ownership state) in the initial node, then state can transition to S when other nodes begin reading the block. |

|||

There are a two primary methods for allowing the system to track where blocks are cached and in what condition across each node. Home-centric request-response uses the home to service requests and drive states, whereas requester-centric allows each node to drive and manage it's own requests through the home. |

|||

[[File:Dsm states.png|thumb|State diagram of a block of memory in a DSM. A block is "owned" if one of the nodes has the block in state EM.]] |

|||

=== Home-centric Request and Response === |

|||

In a home-centric system, the DSM will avoid having to handle request-response races between nodes by allowing only one transaction to occur at a time until the home node has decided that the transaction is finished. |

|||

A basic DSM will track at least three states among nodes for any given block in the directory.<ref>{{Cite book|title=Fundamentals of Parallel Multicore Architecture|last=Solihin|first=Yan|publisher=Chapman and Hall/CRC|year=2015|isbn=9781482211184|location=Boca Raton, Florida|pages=339–340}}</ref> There will be some state to dictate the block as uncached (U), a state to dictate a block as exclusively owned or modified owned (EM), and a state to dictate a block as shared (S). As blocks come into the directory organization, they will transition from U to EM (ownership state) in the initial node. The state can transition to S when other nodes begin reading the block. |

|||

==== Advantages ==== |

|||

* Data races are impossible |

|||

* Simple to implement |

|||

==== Disadvantages ==== |

|||

* Slow, buffered request-response strategy, limited by the home node |

|||

There are two primary methods for allowing the system to track where blocks are cached and in what condition across each node. Home-centric request-response uses the home to service requests and drive states, whereas requester-centric allows each node to drive and manage its own requests through the home. |

|||

=== Requester-centric Request and Response === |

|||

In a requester-centric system, the DSM will allow nodes to talk at will to each other through the home. This means that multiple transactions can be attempting to go at once, but this requires additional considerations to ensure consistency. For example: when one node is processing a block, if it receives a request for that block from another node it will send a NAck (Negative Acknowledgement) to tell the initiator that the processing node can't fulfill that request right away. |

|||

=== Home-centric request and response === |

|||

==== Advantages ==== |

|||

In a home-centric system, the DSM will avoid having to handle request-response races between nodes by allowing only one transaction to occur at a time until the home node has decided that the transaction is finished—usually when the home has received every responding processor's response to the request. An example of this is Intel's [[Intel QuickPath Interconnect|QPI]] home-source mode.<ref name=":0">{{cite book |

|||

* Fast |

|||

| last1 = Sorin |

|||

| last2 = Hill |

|||

| last3 = Wood |

|||

| first1 = Daniel J. |

|||

| first2 = Mark D. |

|||

| first3 = David A. |

|||

| title = A Primer on Memory Consistency and Cache Coherence |

|||

| publisher = Morgan & Claypool |

|||

| year = 2011 |

|||

| page = 174 |

|||

| isbn = 978-16-0845564-5 |

|||

}}</ref> The advantages of this approach are that it's simple to implement but its request-response strategy is slow and buffered due to the home node's limitations. |

|||

=== Requester-centric request and response === |

|||

==== Disadvantages ==== |

|||

In a requester-centric system, the DSM will allow nodes to talk at will to each other through the home. This means that multiple nodes can attempt to start a transaction, but this requires additional considerations to ensure coherence. For example: when one node is processing a block, if it receives a request for that block from another node it will send a NAck (Negative Acknowledgement) to tell the initiator that the processing node can't fulfill that request right away. An example of this is Intel's QPI snoop-source mode.<ref name=":0" /> This approach is fast but it does not naturally prevent race conditions and generates more bus traffic. |

|||

* Does not naturally prevent race conditions |

|||

* Generates more bus traffic |

|||

== Consistency |

== Consistency models == |

||

The DSM must follow certain rules to maintain consistency over how read and write order is viewed among nodes, called the system's ''[[consistency model]]''. |

The DSM must follow certain rules to maintain consistency over how read and write order is viewed among nodes, called the system's ''[[consistency model]]''. |

||

Suppose we have ''n'' processes and ''Mi'' memory operations for each process ''i'', and that all the operations are executed sequentially. We can conclude that (''M1'' + ''M2'' + … + ''Mn'')!/(''M1''! ''M2''!… ''Mn''!) are possible |

Suppose we have ''n'' processes and ''Mi'' memory operations for each process ''i'', and that all the operations are executed sequentially. We can conclude that (''M1'' + ''M2'' + … + ''Mn'')!/(''M1''! ''M2''!… ''Mn''!) are possible interleavings of the operations. The issue with this conclusion is determining the correctness of the interleaved operations. Memory coherence for DSM defines which interleavings are permitted. |

||

[[File:Sequential invocations and responses in DSM.jpg| |

[[File:Sequential invocations and responses in DSM.jpg|380px|right|Sequential invocations and responses in DSM]] |

||

==Replication |

==Replication== |

||

There are two types of replication Algorithms. Read replication and Write replication. |

|||

In Read replication multiple nodes can read at the same time but only one node can write. |

|||

In Write replication multiple nodes can read and write at the same time. The write requests |

|||

are handled by a sequencer. |

|||

Replication of shared data in general tends to: |

Replication of shared data in general tends to: |

||

* Reduce network traffic |

* Reduce network traffic |

||

| Line 117: | Line 112: | ||

However, preserving coherence and consistency may become more challenging. |

However, preserving coherence and consistency may become more challenging. |

||

==Release and |

==Release and entry consistency== |

||

* Release consistency: when a process exits a critical section, new values of the variables are propagated to all sites. |

* Release consistency: when a process exits a [[critical section]], new values of the variables are propagated to all sites. |

||

* Entry consistency: when a process enters a critical section, it will automatically update the values of the shared variables. |

* Entry consistency: when a process enters a critical section, it will automatically update the values of the shared variables. |

||

** View-based Consistency: it is a variant of Entry Consistency, except the shared variables of a critical section are automatically detected by the system. An implementation of view-based consistency is [http://vodca.otago.ac.nz VODCA] which has comparable performance to [[Message Passing Interface|MPI]] on cluster computers. |

** View-based Consistency: it is a variant of Entry Consistency, except the shared variables of a critical section are automatically detected by the system. An implementation of view-based consistency is [http://vodca.otago.ac.nz VODCA] {{Webarchive|url=https://web.archive.org/web/20160215102157/http://vodca.otago.ac.nz/ |date=2016-02-15 }} which has comparable performance to [[Message Passing Interface|MPI]] on cluster computers. |

||

=== Examples === |

=== Examples === |

||

* [[Kerrighed]] |

* [[Kerrighed]] |

||

* [[OpenSSI]] |

* [[OpenSSI|Open SSI]] |

||

* [[MOSIX]] |

* [[MOSIX]] |

||

* [[TreadMarks]] |

* [[TreadMarks]] |

||

* [http://vodca.otago.ac.nz VODCA] |

* [http://vodca.otago.ac.nz VODCA] {{Webarchive|url=https://web.archive.org/web/20160215102157/http://vodca.otago.ac.nz/ |date=2016-02-15 }} |

||

* [http://dipc-2.sourceforge.net/ DIPC] |

* [http://dipc-2.sourceforge.net/ DIPC] |

||

==See also== |

==See also== |

||

* {{annotated link|Distributed cache}} |

|||

* [[Memory virtualization]] |

|||

* {{annotated link|Memory virtualization}} |

|||

* [[Single-system image]] |

|||

* {{annotated link|Single-system image}} |

|||

* [[Distributed cache]] |

|||

* {{annotated link|Remote direct memory access}} |

|||

==References== |

==References== |

||

| Line 139: | Line 135: | ||

==External links== |

==External links== |

||

* [http://www.sharedcache.com Distributed Shared Cache] |

* [https://web.archive.org/web/20150910102108/http://www.sharedcache.com/ Distributed Shared Cache] |

||

* [http://portal.acm.org/citation.cfm?id=75105&am ''Memory coherence in shared virtual memory systems'' ] by Kai Li, Paul Hudak published in ACM Transactions on Computer Systems, Volume 7 Issue 4, Nov. 1989 |

* [http://portal.acm.org/citation.cfm?id=75105&am ''Memory coherence in shared virtual memory systems'' ] by Kai Li, Paul Hudak published in ACM Transactions on Computer Systems, Volume 7 Issue 4, Nov. 1989 |

||

Latest revision as of 05:46, 4 February 2024

This article needs additional citations for verification. (January 2022) |

In computer science, distributed shared memory (DSM) is a form of memory architecture where physically separated memories can be addressed as a single shared address space. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared—i.e., the same physical address on two processors refers to the same location in memory.[1]: 201 Distributed global address space (DGAS), is a similar term for a wide class of software and hardware implementations, in which each node of a cluster has access to shared memory in addition to each node's private (i.e., not shared) memory.

Übersicht

[edit]

A distributed-memory system, often called a multicomputer, consists of multiple independent processing nodes with local memory modules which is connected by a general interconnection network. Software DSM systems can be implemented in an operating system, or as a programming library and can be thought of as extensions of the underlying virtual memory architecture. When implemented in the operating system, such systems are transparent to the developer; which means that the underlying distributed memory is completely hidden from the users. In contrast, software DSM systems implemented at the library or language level are not transparent and developers usually have to program them differently. However, these systems offer a more portable approach to DSM system implementations. A DSM system implements the shared-memory model on a physically distributed memory system.

DSM can be achieved via software as well as hardware. Hardware examples include cache coherence circuits and network interface controllers. There are three ways of implementing DSM:

- Page-based approach using virtual memory

- Shared-variable approach using routines to access shared variables

- Object-based approach, ideally accessing shared data through object-oriented discipline

Advantages

[edit]- Scales well with a large number of nodes

- Message passing is hidden

- Can handle complex and large databases without replication or sending the data to processes

- Generally cheaper than using a multiprocessor system

- Provides large virtual memory space

- Programs are more portable due to common programming interfaces

- Shield programmers from sending or receiving primitives

Disadvantages

[edit]- Generally slower to access than non-distributed shared memory

- Must provide additional protection against simultaneous accesses to shared data

- May incur a performance penalty

- Little programmer control over actual messages being generated

- Programmers need to understand consistency models to write correct programs

Comparison with message passing

[edit]| Message passing | Distributed shared memory |

|---|---|

| Variables have to be marshalled | Variables are shared directly |

| Cost of communication is obvious | Cost of communication is invisible |

| Processes are protected by having private address space | Processes could cause error by altering data |

| Processes should execute at the same time | Executing the processes may happen with non-overlapping lifetimes |

Software DSM systems also have the flexibility to organize the shared memory region in different ways. The page based approach organizes shared memory into pages of fixed size. In contrast, the object based approach organizes the shared memory region as an abstract space for storing shareable objects of variable sizes. Another commonly seen implementation uses a tuple space, in which the unit of sharing is a tuple.

Shared memory architecture may involve separating memory into shared parts distributed amongst nodes and main memory; or distributing all memory between nodes. A coherence protocol, chosen in accordance with a consistency model, maintains memory coherence.

Directory memory coherence

[edit]Memory coherence is necessary such that the system which organizes the DSM is able to track and maintain the state of data blocks in nodes across the memories comprising the system. A directory is one such mechanism which maintains the state of cache blocks moving around the system.

States

[edit]

A basic DSM will track at least three states among nodes for any given block in the directory.[2] There will be some state to dictate the block as uncached (U), a state to dictate a block as exclusively owned or modified owned (EM), and a state to dictate a block as shared (S). As blocks come into the directory organization, they will transition from U to EM (ownership state) in the initial node. The state can transition to S when other nodes begin reading the block.

There are two primary methods for allowing the system to track where blocks are cached and in what condition across each node. Home-centric request-response uses the home to service requests and drive states, whereas requester-centric allows each node to drive and manage its own requests through the home.

Home-centric request and response

[edit]In a home-centric system, the DSM will avoid having to handle request-response races between nodes by allowing only one transaction to occur at a time until the home node has decided that the transaction is finished—usually when the home has received every responding processor's response to the request. An example of this is Intel's QPI home-source mode.[3] The advantages of this approach are that it's simple to implement but its request-response strategy is slow and buffered due to the home node's limitations.

Requester-centric request and response

[edit]In a requester-centric system, the DSM will allow nodes to talk at will to each other through the home. This means that multiple nodes can attempt to start a transaction, but this requires additional considerations to ensure coherence. For example: when one node is processing a block, if it receives a request for that block from another node it will send a NAck (Negative Acknowledgement) to tell the initiator that the processing node can't fulfill that request right away. An example of this is Intel's QPI snoop-source mode.[3] This approach is fast but it does not naturally prevent race conditions and generates more bus traffic.

Consistency models

[edit]The DSM must follow certain rules to maintain consistency over how read and write order is viewed among nodes, called the system's consistency model.

Suppose we have n processes and Mi memory operations for each process i, and that all the operations are executed sequentially. We can conclude that (M1 + M2 + … + Mn)!/(M1! M2!… Mn!) are possible interleavings of the operations. The issue with this conclusion is determining the correctness of the interleaved operations. Memory coherence for DSM defines which interleavings are permitted.

Replication

[edit]There are two types of replication Algorithms. Read replication and Write replication. In Read replication multiple nodes can read at the same time but only one node can write. In Write replication multiple nodes can read and write at the same time. The write requests are handled by a sequencer. Replication of shared data in general tends to:

- Reduce network traffic

- Promote increased parallelism

- Result in fewer page faults

However, preserving coherence and consistency may become more challenging.

Release and entry consistency

[edit]- Release consistency: when a process exits a critical section, new values of the variables are propagated to all sites.

- Entry consistency: when a process enters a critical section, it will automatically update the values of the shared variables.

- View-based Consistency: it is a variant of Entry Consistency, except the shared variables of a critical section are automatically detected by the system. An implementation of view-based consistency is VODCA Archived 2016-02-15 at the Wayback Machine which has comparable performance to MPI on cluster computers.

Examples

[edit]- Kerrighed

- Open SSI

- MOSIX

- TreadMarks

- VODCA Archived 2016-02-15 at the Wayback Machine

- DIPC

See also

[edit]- Distributed cache – Type of computer cache

- Memory virtualization – Computer science term

- Single-system image – Cluster dedicated operating system

- Remote direct memory access – Low-level hardware direct memory access

References

[edit]- ^ Patterson, David A.; Hennessy, John L. (2006). Computer Architecture: A Quantitative Approach (4th ed.). Burlington, Massachusetts: Morgan Kaufmann. ISBN 978-01-2370490-0.

- ^ Solihin, Yan (2015). Fundamentals of Parallel Multicore Architecture. Boca Raton, Florida: Chapman and Hall/CRC. pp. 339–340. ISBN 9781482211184.

- ^ a b Sorin, Daniel J.; Hill, Mark D.; Wood, David A. (2011). A Primer on Memory Consistency and Cache Coherence. Morgan & Claypool. p. 174. ISBN 978-16-0845564-5.

External links

[edit]- Distributed Shared Cache

- Memory coherence in shared virtual memory systems by Kai Li, Paul Hudak published in ACM Transactions on Computer Systems, Volume 7 Issue 4, Nov. 1989