Study: State Report Cards Need Big Improvements in Tracking COVID Learning Loss

Polikoff: Given the importance of public education and the need for student data, how can states justify doing such a lousy job at informing parents?

Get stories like these delivered straight to your inbox. Sign up for The 74 Newsletter

Most people who know me would probably say I’m a data and accountability advocate. I’m on the board of the Data Quality Campaign and I’ve written extensively (and favorably) about the role of accountability in promoting educational improvement. But I’ve also been critical of accountability, especially so-called public accountability organized around the idea that parents and advocates will use data on key student outcomes to pressure schools to improve.

When I partnered with the Center on Reinventing Public Education on a report reviewing how transparent state report cards are in reflecting COVID-19 learning loss and recovery, I came in with an open mind. I expected they would contain most of the information we sought and would mostly be pretty usable. I was wrong. I think everyone on our team was incredibly disappointed by many of the state report card websites and their inability to answer our primary questions of interest about the effects of COVID on student outcomes.

Here are four questions that we think states should consider moving forward. They come from our five analysts and draw on direct quotes from a written interview we all completed after we finished rating the report cards.

Where Is the Data?

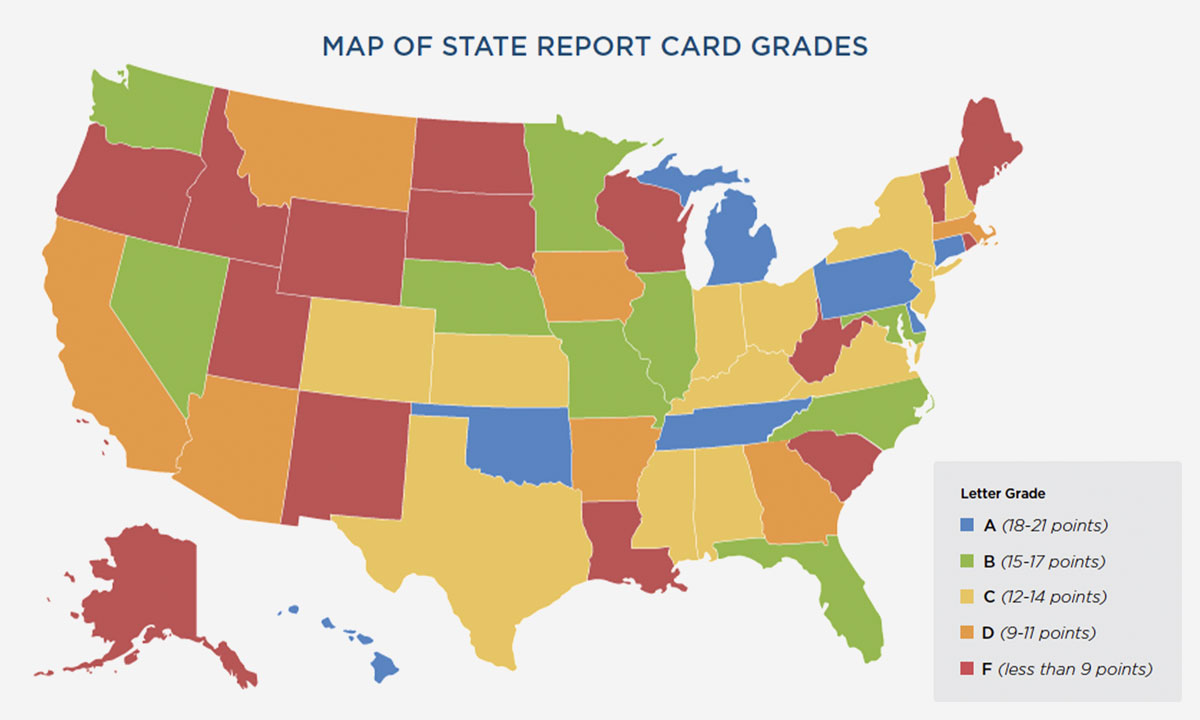

The high-level takeaway from our report: It is extremely difficult on most state report card websites to track longitudinal performance data at the school level going back to before COVID. There are a few exceptions — seven states (Connecticut, Delaware, Hawaii, Michigan, Oklahoma, Pennsylvania and Tennessee) earned an A for having this data available.

But even in many of these better-performing states, there were problems. Many state report cards make it difficult to do things that should be easy. Parents should be able to use the report cards to compare schools they are considering for their children, but in too many places, that is impossible. Advocates should be able to understand, at minimum, the performance of federally mandated student groups, such as children with disabilities and English learners, but many states completely bury these data. Further, report cards often lack other kinds of data that parents might want about available services, like advanced coursework, counseling, even sports and the arts. Overall, the reviewers were disappointed and disheartened.

Are There Really No Best Practices?

We were struck by the variation across the 50 states and the District of Columbia. One reviewer commented, “It was as if 51 different contractors designed these report cards without so much as a single best practice about how they’re supposed to look or function.” Some states leaned on graphs, others on tables. Some websites were easy to navigate, while others were befuddling. Some made subgroup data easy to find; others made it nearly impossible. Some report card websites couldn’t even easily be found through a Google search.

Our analysts also noted the difficulty of simply figuring out the basics of each site. “I was surprised with how different each state report card was and the amount of time it took to familiarize myself with it enough to find the data I was looking for,” one wrote. I felt this acutely as I examined all 51 report cards. It sometimes took two or three 10- to 15-minute visits to feel like I understood the layout of some of the sites.

Overall, we felt that there surely must be some best practices in reporting these kinds of data that states could draw on to improve their report cards. We all wanted easily navigable sites (i.e., that made it clear where to click to find what you wanted) where 1) measures were described in clear language and organized thematically, and 2) users could manipulate the data to answer their most important questions. No site met this bar, though some, such as Idaho, Illinois, Indiana, New Mexico and Oklahoma, were far better than others; Alaska, Louisiana, New York and Vermont were among 11 states that earned the lowest grade for usability. There could be real value in researchers working with organizations like the Council of Chief State School Officers to lay out some explicit design principles.

Who Is the Intended User?

State report cards are intended principally for parents. Realtors certainly think parents care about school quality; otherwise, they wouldn’t name local elementary schools in their listings. The popularity of sites like GreatSchools proves that at least some demand for school performance data exists. However, if parents are the main intended audience for these reports, it sure doesn’t seem that way. “I could see [parents] spending considerably more time on this compared to our research team,” said one of our researchers. Another described the situation for parents as “frustrating and disempowering,” echoing what the Data Quality Campaign found last year when it asked parents to try to navigate state report cards.

We felt that the report cards were perhaps trying to serve too many audiences and, in the end, not serving any very well. States need to think clearly about whom they’re serving and redesign their report cards from the ground up, working with those groups to ensure usability. In particular, the language of the report cards needs to be clear for people who may not be experts in accountability terminology and education-related acronyms. Even with our levels of expertise, we were sometimes unclear about what different data points meant.

Are State Reports Doomed to Be a Compliance Exercise?

A few reviewers thought some state report cards seem like a compliance exercise: States post them because the federal government requires them to, but, ultimately, they’re not concerned about whether these websites are usable. This is a somewhat cynical take, but it’s hard not to feel that way after reviewing some of these sites.

But even if report card sites did start as compliance exercises, they can still serve a positive function in the long run. We don’t want to be Pollyannaish about their potential, but parents clearly care about the effectiveness of the schools they choose for their children, and states clearly can do better at communicating schools’ effectiveness.

We hope this review is a wake-up call for states to consider better reporting of school performance data. While private companies, like GreatSchools, can provide alternatives, states are missing an opportunity to shape parents’ thinking about what matters for school effectiveness, and why. The failure of states to provide high-quality, usable report cards raises a fifth question: Given the importance of effective public education and the apparent need and demand for the data, how can states justify doing such a lousy job at informing parents?
