Claude 3.5 Sonnet sets new coding benchmarks

Anthropic’s latest AI model, Claude 3.5 Sonnet, arrived seemingly out of nowhere last week, free for everyone to use, and is now setting new benchmarks on a number of metrics. Most notably, it topped the leaderboards in key categories of the LMSYS Chatbot Arena, a benchmark used to measure large language model performance. Specifically, it secured the top position in the ‘Coding Arena’ and ‘Hard Prompts’ categories, beating industry behemoths like GPT-4o and Gemini 1.5 Pro, all while being a lighter, faster model to run.

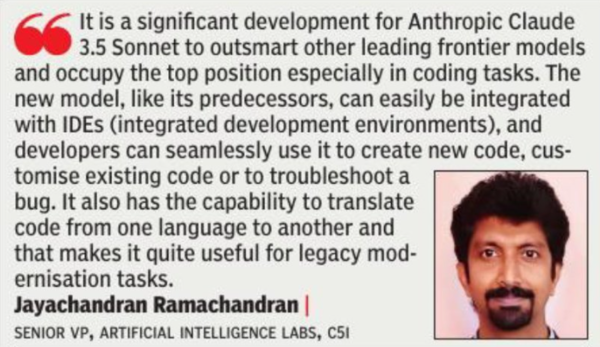

Jayachandran Ramachandran, senior VP of Artificial Intelligence Labs for C5i, says the LMSYS Chatbot Arena is a crowdsourced, open benchmarking platform: users run various tasks as pairwise comparisons between models and give feedback on which model provides the better response. “The ranking system is quite transparent, robust, and reliable. In recent times, the top position was occupied by frontier models such as GPT-4 series and Gemini-1.5 series. It is a significant development for Claude 3.5 Sonnet to outsmart other leading frontier models and occupy the top position, especially in coding tasks,” he says.
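To make the idea of ranking from pairwise votes concrete, here is a minimal sketch that assumes an Elo-style rating update. The article does not say which rating method the Arena uses, so the update rule, the starting rating of 1000, the `k` factor, and the model names and votes are all illustrative assumptions rather than the platform's actual implementation or data.

```python
from collections import defaultdict


def update_elo(ratings, winner, loser, k=32.0):
    """Apply one Elo-style update for a single pairwise vote (assumed method)."""
    r_win, r_lose = ratings[winner], ratings[loser]
    # Probability the winner was expected to win, given current ratings.
    expected_win = 1.0 / (1.0 + 10 ** ((r_lose - r_win) / 400.0))
    # An unexpected win moves the ratings more than an expected one.
    delta = k * (1.0 - expected_win)
    ratings[winner] = r_win + delta
    ratings[loser] = r_lose - delta


def rank_models(votes, initial=1000.0):
    """Return the ratings and a best-first leaderboard from (winner, loser) votes."""
    ratings = defaultdict(lambda: initial)
    for winner, loser in votes:
        update_elo(ratings, winner, loser)
    leaderboard = sorted(ratings, key=ratings.get, reverse=True)
    return dict(ratings), leaderboard


# Hypothetical votes: each tuple is (preferred model, other model).
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
    ("model_a", "model_b"),
]
ratings, leaderboard = rank_models(votes)
print(leaderboard)
```

With these made-up votes, `model_a` wins every comparison it appears in and ends up first. A real leaderboard would aggregate far more votes and typically report uncertainty alongside each rating.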

Saurav Swaroop, co-founder & CTO of financing platform Velocity, which recently launched Vani AI, a GenAI-led customer calling solution for financial institutions, says that while GPT-4 has been assisting developers with writing code for some time now, it has been prone to errors and hallucinations. “However, Claude can write production-level deployable code now. This will boost developer productivity and the time to build software will keep coming down,” he says.

With its advanced reasoning skills, it’s great at handling multi-step workflows too, which is essential for complex projects, says Sourabh Kumar, senior manager of experience engineering for Publicis Sapient. “Compared to other industry leaders like GPT-4o, Claude 3.5 Sonnet offers faster processing speeds and higher accuracy in complex coding and reasoning tasks. For industries such as finance, where accurate data analysis is critical, or retail, where trend prediction can drive success, integrating Claude 3.5 Sonnet can lead to significant efficiency gains and innovation,” he says.

Claude 3.5 Sonnet can also offer alternative solutions that coders might not have even considered, says Bebi Negi, senior lead data scientist at the Analytics Centre of Excellence for Happiest Minds Technologies.

However, Negi says coders will still need to check the code created by the model. “While it can create code quickly, it is very important to understand the code and make sure it meets a project’s requirements and standards. Even though benchmarks rate the model at around 90%, the remaining 10% gap underscores the necessity for coders to review the outputs,” she says.

Securing the top spot in the Hard Prompts category was also a big deal, says Negi. “The Hard Prompts category was all about handling complex tasks and instructions. The model did a great job here too, showing it can understand and generate high-quality content, even when the instructions are quite tricky.”
